Aserto's cloud-agnostic design for running production workloads across cloud providers

May 2nd, 2023

Andrew Poland

DevOps

Earlier this year, we migrated Aserto's core production infrastructure from Google Cloud Platform (GCP) to Azure. Having pulled it off successfully, we're excited to share the steps we took and the principles that made the project a success.

Decision to migrate

For a software organization, time spent reconfiguring infrastructure is often time we'd rather spend developing new product functionality, so allocating resources to a migration requires a sufficient business incentive. Ours arrived in the form of Microsoft's Startup Founders Hub and its generous Azure cloud credits.

Migration strategy

Aserto's product design has embraced cloud-native, cloud-agnostic principles from the start. It uses container deployments orchestrated by Kubernetes as the basic abstraction over our hardware environment.

By embracing DevOps best practices from day one, our team avoided depending on any environment-specific deployment tooling. Our CI build-and-test process outputs a Docker container image, and the CD process launches instances of that container by applying a deployment to Kubernetes. As a result, migrating the compute workloads to Azure required only small changes to this process: we configured authentication to the Kubernetes API at the new cloud provider and began directing deployments to the new environment.
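The CD change described above can be sketched as follows. This is a minimal, hypothetical sketch that assumes a kubeconfig-based workflow: the cluster names, region, resource group, and manifest path are placeholders, and the code illustrates the idea rather than reproducing Aserto's pipeline. Both `gcloud container clusters get-credentials` and `az aks get-credentials` write credentials into the local kubeconfig and make that cluster the current context, so the `kubectl apply` step is the same for either cloud.

```python
"""Sketch: in a Kubernetes CD step, only the credential fetch is cloud-specific.

All cluster names, resource groups, regions, and paths are hypothetical.
"""
import shlex
import subprocess
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    provider: str  # "gcp" or "azure"
    name: str      # hypothetical cluster name
    location: str  # GCP region, or Azure resource group


def credentials_command(cluster: Cluster) -> list[str]:
    """Return the CLI call that writes this cluster's credentials into kubeconfig."""
    if cluster.provider == "gcp":
        return ["gcloud", "container", "clusters", "get-credentials",
                cluster.name, "--region", cluster.location]
    if cluster.provider == "azure":
        return ["az", "aks", "get-credentials",
                "--resource-group", cluster.location, "--name", cluster.name]
    raise ValueError(f"unsupported provider: {cluster.provider}")


def deploy_command(manifest: str) -> list[str]:
    """Provider-independent step: apply the same manifest to the current context."""
    return ["kubectl", "apply", "-f", manifest]


def deploy(cluster: Cluster, manifest: str, dry_run: bool = True) -> list[list[str]]:
    """Fetch credentials, then apply the manifest. In dry-run mode, only print the commands."""
    steps = [credentials_command(cluster), deploy_command(manifest)]
    for step in steps:
        if dry_run:
            print(shlex.join(step))
        else:
            subprocess.run(step, check=True)  # stop on the first failing command
    return steps
```

For example, `deploy(Cluster("azure", "prod", "prod-rg"), "k8s/deployment.yaml")` prints the two commands, and passing `dry_run=False` runs them. Pointing CD at a different cloud then amounts to changing the `Cluster` value.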

Preparation

In preparation, we created checklists covering the migration requirements, along with contingency plans for unexpected situations that might arise during the move. We decided to pause service and observe a maintenance window during the data-transfer portion of the migration, so that all transactions were committed to the destination datastores before traffic was directed to the new cluster. This gave us a definite go/no-go point: if anything failed during the data sync, we could simply direct traffic back to the old cluster.
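A go/no-go gate like the one described above could be expressed as a check that refuses to cut over unless every table's row count matches between source and destination. This is a hypothetical sketch: the table names and counts are invented, and row-count parity is a deliberately simple stand-in for whatever verification a team actually runs. Because writes are paused during the maintenance window, counts taken on both sides should not drift, which is what makes a direct comparison meaningful.

```python
"""Sketch of a go/no-go gate for the data-sync step (table names are hypothetical)."""
from typing import Mapping


def go_no_go(source_counts: Mapping[str, int],
             dest_counts: Mapping[str, int]) -> tuple[bool, list[str]]:
    """Return (go, problems). 'go' is True only if every source table exists
    in the destination with exactly the same row count."""
    problems = []
    for table, expected in sorted(source_counts.items()):
        actual = dest_counts.get(table)
        if actual is None:
            problems.append(f"{table}: missing in destination")
        elif actual != expected:
            problems.append(f"{table}: expected {expected} rows, found {actual}")
    # Any problem means "no-go": traffic stays on (or returns to) the old cluster.
    return (not problems, problems)
```

For instance, `go_no_go({"tenants": 120, "policies": 4031}, {"tenants": 120, "policies": 4030})` reports a mismatch on `policies`, so the migration would not proceed past the gate.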

We performed several dry runs, during which the team refined the scripted database export/import automation to be executed on migration day. These rehearsals gave us confidence that all transactions would be moved intact from the SQL instances and that we wouldn't run into surprises when performing these operations for real.
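Scripted export/import of PostgreSQL data is commonly built on `pg_dump` and `pg_restore`. The sketch below is an assumption about how such automation might look, not the script Aserto ran: the database names and connection URLs are hypothetical, the destination databases are assumed to exist already, and credentials are assumed to come from the URL or libpq's usual `PGPASSWORD`/`.pgpass` mechanisms. It uses the custom archive format so `pg_restore` can restore with parallel jobs, and by default it only returns the command plan, which makes dry runs cheap to repeat.

```python
"""Sketch: export each database with pg_dump and import it with pg_restore.

Database names and connection URLs are hypothetical placeholders.
"""
import subprocess
from pathlib import Path


def migration_commands(db: str, source_url: str, dest_url: str,
                       workdir: Path, jobs: int = 4) -> list[list[str]]:
    """Build the dump and restore commands for one database."""
    archive = workdir / f"{db}.dump"
    dump = ["pg_dump", "--format=custom", f"--file={archive}",
            f"--dbname={source_url}/{db}"]
    restore = ["pg_restore", "--no-owner", "--exit-on-error", f"--jobs={jobs}",
               f"--dbname={dest_url}/{db}", str(archive)]
    return [dump, restore]


def migrate_all(databases: list[str], source_url: str, dest_url: str,
                workdir: str, execute: bool = False) -> list[list[str]]:
    """Return the full command plan; with execute=True, also run it in order."""
    plan = []
    for db in databases:
        for cmd in migration_commands(db, source_url, dest_url, Path(workdir)):
            plan.append(cmd)
            if execute:
                # check=True raises on the first failing command, halting the run.
                subprocess.run(cmd, check=True)
    return plan
```

Running `migrate_all([...], source, dest, "/tmp/migration")` without `execute=True` during rehearsals lets the team review the exact commands before migration day.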

Migration day

On migration day, we moved over a dozen services and six Postgres databases from GCP's us-central1 region to Azure's East US region. By executing the prepared scripted automation, we completed the entire process in under two hours.
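Executing prepared automation in a fixed order, timing each step and halting at the first failure, might look like the sketch below. The step names are hypothetical and each step is a plain Python callable; in a real runbook the steps would wrap commands such as the database export/import, verification, and traffic cutover. Halting on failure is what preserves the go/no-go point: later steps, such as the cutover, never run after a failed sync.

```python
"""Sketch of an ordered migration runbook runner (step names are hypothetical)."""
import time
from typing import Callable


def run_runbook(steps: list[tuple[str, Callable[[], None]]]) -> list[tuple[str, str, float]]:
    """Run steps in order and return (name, status, seconds) for each step attempted.

    Execution stops at the first step that raises, so later steps never run.
    """
    results = []
    for name, action in steps:
        start = time.monotonic()
        try:
            action()
        except Exception as exc:
            results.append((name, f"failed: {exc}", time.monotonic() - start))
            break
        results.append((name, "ok", time.monotonic() - start))
    return results
```

Summing the recorded durations gives the total time spent, which is how a claim like "under two hours" can be checked against the rehearsals.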

We attribute our success to the design principles the team has stuck to throughout the product's development. These include:

- Leveraging open-source software wherever possible. Aserto is designed around Kubernetes deployments, which can be launched on any major cloud platform. All product data is stored in PostgreSQL, which can be exported from and restored to any platform. This helps us avoid relying on proprietary features of any specific cloud vendor.

- Using infrastructure-as-code to keep our engineering environment in sync with production. This gives us high confidence that tests that pass in the engineering environment will also pass when the code reaches production.

- Most importantly, maintaining a comprehensive set of integration tests that exercise the application's critical paths. These tests make it easy to change one thing at a time and rerun them to confirm that all the lights still turn green, and they let us detect anything that isn't quite working after the migration. Great tests are fundamental to any migration project, no matter how well prepared it is.

- Migrating iteratively for the handful of deployments that depended on GCP-specific services, such as object storage and Cloud Scheduler. We could keep pointing to these services from Azure and migrate them to open-source equivalents in stages as time allows. For example, we are evaluating a Kubernetes deployment of the Temporal scheduler to replace our dependency on GCP's Cloud Scheduler.
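A post-migration check in the spirit of the integration tests above can start as simply as probing each critical endpoint and requiring a healthy response. This sketch is hypothetical: the URLs are placeholders, it checks only HTTP status codes (real integration tests exercise far more behavior), and `fetch` is injectable so the logic can be tested without a network.

```python
"""Sketch: post-migration smoke checks against critical endpoints (URLs are hypothetical)."""
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request, or 0 if the host is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # non-2xx responses still carry a status code
        return err.code
    except OSError:  # connection errors and timeouts
        return 0


def smoke_check(urls: list[str],
                fetch: Callable[[str], int] = http_status) -> dict[str, bool]:
    """Map each URL to True if it answered with a 2xx status."""
    return {url: 200 <= fetch(url) < 300 for url in urls}
```

After cutover, `all(smoke_check(["https://api.example.com/healthz"]).values())` answers the "are all the lights green?" question for the listed endpoints.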

What's left

Although the migration from GCP to Azure succeeded, a few things are still left to do:

- We relied heavily on GCP's logging and monitoring, so we need to set up equivalent solutions in Azure. This work is currently in progress.

- We still need to move away from a few vendor-specific design choices we made early on.

Conclusion

At other companies where we've worked, the freedom to move between clouds was often discussed but was usually more of an aspiration than a reality. It has been a rewarding experience to work through a complete vendor migration and see sound design principles pay off.

Migrating an application requires careful planning and execution. Our experience shows that today's principles and tools are mature enough to treat cloud computing as a commodity, even for complex applications.

Andrew Poland

Senior Reliability Engineer

Related Content

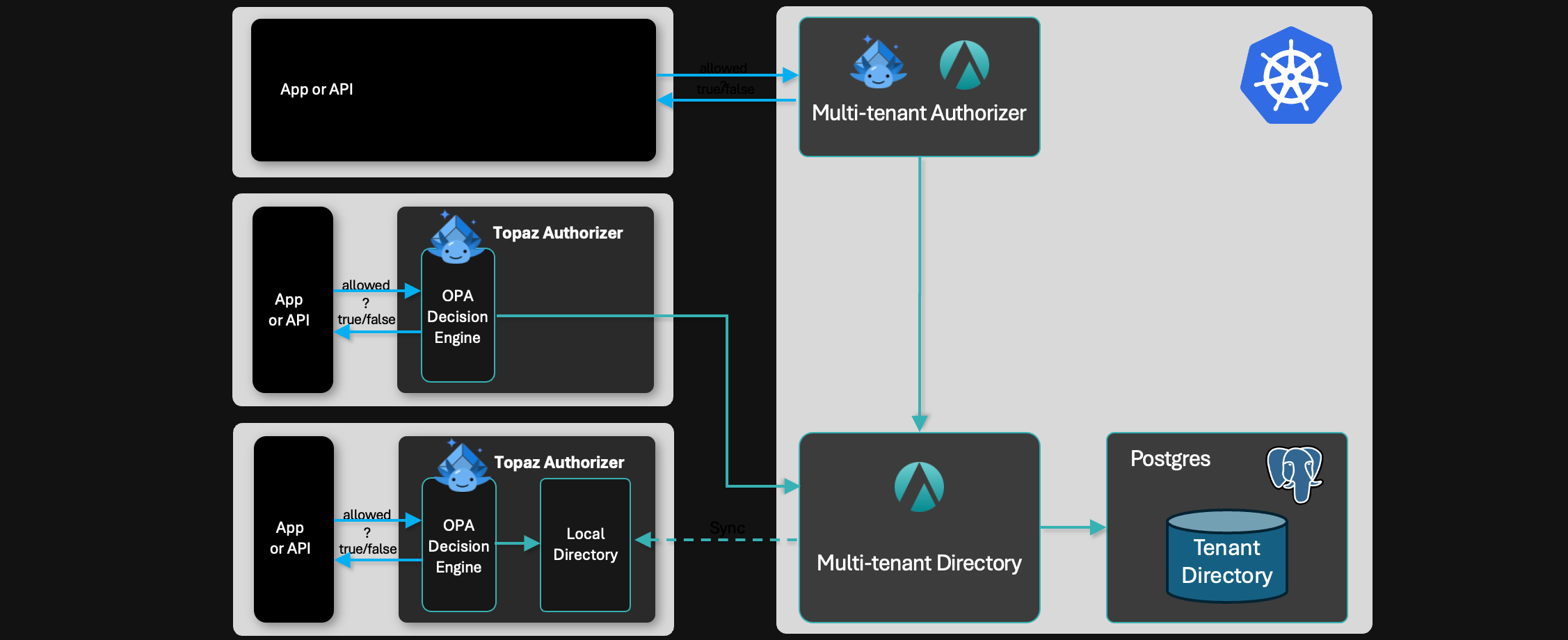

Centralized vs Distributed Authorization

What are the tradeoffs between running a central authorization service versus distributed authorizers?

Jan 24th, 2025

Policy-as-Code for Docker and Kubernetes with Conftest or Gatekeeper

In this post, we'll explore how Aserto uses Policy-as-Code to centrally define our important engineering best practices and share them amongst a diverse set of teams and microservices.

May 12th, 2022

&color=rgb(100%2C100%2C100)&link=https%3A%2F%2Fgithub.com%2Faserto-dev%2Ftopaz)